Introduction

Classifying cats and dogs is a fairly old problem by now. Still, for anyone just starting out in machine learning, it is one of the basic exercises for practicing with, and getting to know, the libraries at hand. Here we will apply a pretrained model that ships with Keras to the dataset. To get started, download the training and test sets from https://www.kaggle.com/c/dogs-vs-cats/download/train.zip and https://www.kaggle.com/c/dogs-vs-cats/download/test1.zip.

Implementation

After extracting the data, we can see that the files in the train directory are named label.index.jpg, where label is either dog or cat and index runs from 0 up to 12499. To match the layout expected by the loading code in this post, we have to restructure the data into the following form.

data_dir/classname1/*.*
data_dir/classname2/*.*
...

So we create a cat directory and copy every file whose name starts with cat. into it, then do the same for a dog directory.
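The copying step can also be scripted. The following is a minimal sketch, not the author's own tooling: the function name sort_into_class_dirs and the directory names in the usage note are assumptions made for illustration. It writes into a separate destination directory so that the result contains only class sub-directories.

```python
import os
import shutil


def sort_into_class_dirs(src_dir, dst_dir, labels=("cat", "dog")):
    """Copy files named <label>.<index>.jpg from src_dir into dst_dir/<label>/."""
    counts = {label: 0 for label in labels}
    for label in labels:
        os.makedirs(os.path.join(dst_dir, label), exist_ok=True)

    for name in sorted(os.listdir(src_dir)):
        src = os.path.join(src_dir, name)
        label = name.split(".")[0]  # text before the first dot, e.g. "cat"
        if os.path.isfile(src) and label in counts:
            shutil.copy2(src, os.path.join(dst_dir, label, name))
            counts[label] += 1
    return counts  # number of files copied per class
```

Assuming the archive was extracted to a directory called train, calling sort_into_class_dirs("train", "data_dir") would produce data_dir/cat and data_dir/dog. Files whose names do not start with one of the labels are left alone.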

First, download the pretrained model file, extract it, and put it somewhere on your hard drive. The pretrained model can be downloaded from http://download.tensorflow.org/models/object_detection/mask_rcnn_inception_v2_coco_2018_01_28.tar.gz. Feel free to download other pretrained files if you are curious to explore them. Note that the build_model code later in this post loads InceptionV3 with weights='imagenet', which Keras downloads automatically the first time it is used.

Next, we load the dataset and transform it so it can be fed into training.

import sys
import os
from collections import defaultdict
import numpy as np
# NOTE: scipy.misc.imread and scipy.misc.imresize were removed from recent
# SciPy releases; this code needs an older SciPy with Pillow installed.
import scipy.misc


def preprocess_input(x0):
    # Scale pixel values from [0, 255] to [-1, 1].
    x = x0 / 255.
    x -= 0.5
    x *= 2.
    return x


def reverse_preprocess_input(x0):
    # Inverse of preprocess_input: map [-1, 1] back to [0, 255].
    x = x0 / 2.0
    x += 0.5
    x *= 255.
    return x


def dataset(base_dir, n):
    print("base dir: "+base_dir)
    print("n: "+str(n))
    n = int(n)
    # Map each class label (the first sub-directory under base_dir) to its files.
    d = defaultdict(list)
    for root, subdirs, files in os.walk(base_dir):
        for filename in files:
            file_path = os.path.join(root, filename)
            assert file_path.startswith(base_dir)

            suffix = file_path[len(base_dir):]

            suffix = suffix.lstrip("/")
            suffix = suffix.lstrip("\\")
            if(suffix.find('/')>-1): # Linux
                label = suffix.split("/")[0]
            else: # Windows
                label = suffix.split("\\")[0]
            d[label].append(file_path)
    print("walk directory complete")
    tags = sorted(d.keys())

    processed_image_count = 0
    useful_image_count = 0

    X = []
    y = []

    for class_index, class_name in enumerate(tags):
        filenames = d[class_name]
        for filename in filenames:
            processed_image_count += 1
            if processed_image_count%100 ==0:
                print(class_name+"\tprocess: "+str(processed_image_count)+"\t"+str(len(d[class_name])))
            img = scipy.misc.imread(filename)
            height, width, chan = img.shape
            assert chan == 3
            # Skip images whose longer side is more than twice the shorter side.
            aspect_ratio = float(max((height, width))) / min((height, width))
            if aspect_ratio > 2:
                continue
            # We pick the largest center square.
            centery = height // 2
            centerx = width // 2
            radius = min((centerx, centery))
            img = img[centery-radius:centery+radius, centerx-radius:centerx+radius]
            img = scipy.misc.imresize(img, size=(n, n), interp='bilinear')
            X.append(img)
            y.append(class_index)
            useful_image_count += 1
    print("processed %d, used %d" % (processed_image_count, useful_image_count))

    X = np.array(X).astype(np.float32)
    #X = X.transpose((0, 3, 1, 2))
    X = preprocess_input(X)
    y = np.array(y)

    # Shuffle images and labels with the same random permutation.
    perm = np.random.permutation(len(y))
    X = X[perm]
    y = y[perm]

    print("classes:",end=" ")
    for class_index, class_name in enumerate(tags):
        print(class_name, sum(y==class_index),end=" ")
    print("X shape: ",X.shape)

    return X, y, tags

The code above is fairly simple and easy to follow. One thing to note: images whose ratio between the longer side and the shorter side is greater than 2 are dropped from the dataset.
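To make the filtering, cropping, and scaling arithmetic concrete, here is a small dependency-free sketch that repeats the same calculations on plain numbers. The helper names keep_image, center_square_box, and scale_pixel, and the example image sizes, are made up for illustration and do not appear in the original code.

```python
def keep_image(height, width, max_ratio=2):
    """Mirror the aspect-ratio filter: keep images no more elongated than max_ratio."""
    return float(max(height, width)) / min(height, width) <= max_ratio


def center_square_box(height, width):
    """Return (top, bottom, left, right) of the largest centered square crop."""
    centery = height // 2
    centerx = width // 2
    radius = min(centerx, centery)
    return (centery - radius, centery + radius,
            centerx - radius, centerx + radius)


def scale_pixel(value):
    """Same arithmetic as preprocess_input, applied to a single pixel value."""
    return (value / 255.0 - 0.5) * 2.0


print(keep_image(375, 499))              # True: 499 / 375 is about 1.33
print(keep_image(200, 500))              # False: 500 / 200 = 2.5, so it is skipped
print(center_square_box(375, 499))       # (0, 374, 62, 436): a 374 x 374 crop
print(scale_pixel(0), scale_pixel(255))  # -1.0 1.0
```

Because the radius is computed with integer division, an odd side length loses one pixel: a 375 x 499 image yields a 374 x 374 crop before it is resized to n x n.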

Next, we build our model on top of the ready-made InceptionV3 model. We add a new head at the end (global average pooling, a 1024-unit fully connected layer, and a softmax layer) to classify the data, and this head is what we train. The InceptionV3 layers before it are frozen (their weights are not updated during training).

# Imports assumed for standalone Keras 2.x.
from keras.applications.inception_v3 import InceptionV3
from keras.layers import Dense, GlobalAveragePooling2D
from keras.models import Model


# create the base pre-trained model
def build_model(nb_classes):
    base_model = InceptionV3(weights='imagenet', include_top=False)

    # add a global spatial average pooling layer
    x = base_model.output
    x = GlobalAveragePooling2D()(x)
    # let's add a fully-connected layer
    x = Dense(1024, activation='relu')(x)
    # and a logistic layer
    predictions = Dense(nb_classes, activation='softmax')(x)

    # this is the model we will train
    model = Model(inputs=base_model.input, outputs=predictions)

    # first: train only the top layers (which were randomly initialized)
    # i.e. freeze all convolutional InceptionV3 layers
    for layer in base_model.layers:
        layer.trainable = False

    # compile the model (should be done *after* setting layers to non-trainable)
    print("starting model compile")
    # NOTE: compile() here must be a helper that wraps model.compile(...);
    # it is not defined in this section and is not Python's built-in compile().
    compile(model)
    print("model compile done")
    return model
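build_model calls compile(model), but that helper does not appear in this section. The sketch below shows what such a helper might look like. The name compile_model, the rmsprop optimizer, and the sparse categorical cross-entropy loss are assumptions rather than the author's actual settings; the sparse loss is picked here only because dataset returns integer class indices in y.

```python
def compile_model(model):
    """Hypothetical stand-in for the compile(model) helper used by build_model."""
    model.compile(
        optimizer="rmsprop",                     # assumed optimizer
        loss="sparse_categorical_crossentropy",  # assumed: y holds integer class indices
        metrics=["accuracy"],
    )
    return model
```

If the labels were one-hot encoded instead, "categorical_crossentropy" would be the matching loss. Using a name other than compile also avoids shadowing Python's built-in function of the same name.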

Here is a quick look at the InceptionV3 architecture we are using.

__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            (None, None, None, 3 0
__________________________________________________________________________________________________
conv2d_1 (Conv2D)               (None, None, None, 3 864         input_1[0][0]
__________________________________________________________________________________________________
batch_normalization_1 (BatchNor (None, None, None, 3 96          conv2d_1[0][0]
__________________________________________________________________________________________________
activation_1 (Activation)       (None, None, None, 3 0           batch_normalization_1[0][0]
__________________________________________________________________________________________________
conv2d_2 (Conv2D)               (None, None, None, 3 9216        activation_1[0][0]
__________________________________________________________________________________________________
batch_normalization_2 (BatchNor (None, None, None, 3 96          conv2d_2[0][0]
__________________________________________________________________________________________________
activation_2 (Activation)       (None, None, None, 3 0           batch_normalization_2[0][0]
__________________________________________________________________________________________________
conv2d_3 (Conv2D)               (None, None, None, 6 18432       activation_2[0][0]
__________________________________________________________________________________________________
batch_normalization_3 (BatchNor (None, None, None, 6 192         conv2d_3[0][0]
__________________________________________________________________________________________________
activation_3 (Activation)       (None, None, None, 6 0           batch_normalization_3[0][0]
__________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)  (None, None, None, 6 0           activation_3[0][0]
__________________________________________________________________________________________________
conv2d_4 (Conv2D)               (None, None, None, 8 5120        max_pooling2d_1[0][0]
__________________________________________________________________________________________________
batch_normalization_4 (BatchNor (None, None, None, 8 240         conv2d_4[0][0]
__________________________________________________________________________________________________
activation_4 (Activation)       (None, None, None, 8 0           batch_normalization_4[0][0]
__________________________________________________________________________________________________
conv2d_5 (Conv2D)               (None, None, None, 1 138240      activation_4[0][0]
__________________________________________________________________________________________________
batch_normalization_5 (BatchNor (None, None, None, 1 576         conv2d_5[0][0]
__________________________________________________________________________________________________
activation_5 (Activation)       (None, None, None, 1 0           batch_normalization_5[0][0]
__________________________________________________________________________________________________
max_pooling2d_2 (MaxPooling2D)  (None, None, None, 1 0           activation_5[0][0]
__________________________________________________________________________________________________
conv2d_9 (Conv2D)               (None, None, None, 6 12288       max_pooling2d_2[0][0]
__________________________________________________________________________________________________
batch_normalization_9 (BatchNor (None, None, None, 6 192         conv2d_9[0][0]
__________________________________________________________________________________________________
activation_9 (Activation)       (None, None, None, 6 0           batch_normalization_9[0][0]
__________________________________________________________________________________________________
conv2d_7 (Conv2D)               (None, None, None, 4 9216        max_pooling2d_2[0][0]
__________________________________________________________________________________________________
conv2d_10 (Conv2D)              (None, None, None, 9 55296       activation_9[0][0]
__________________________________________________________________________________________________
batch_normalization_7 (BatchNor (None, None, None, 4 144         conv2d_7[0][0]
__________________________________________________________________________________________________
batch_normalization_10 (BatchNo (None, None, None, 9 288         conv2d_10[0][0]
__________________________________________________________________________________________________
activation_7 (Activation)       (None, None, None, 4 0           batch_normalization_7[0][0]
__________________________________________________________________________________________________
activation_10 (Activation)      (None, None, None, 9 0           batch_normalization_10[0][0]
__________________________________________________________________________________________________
average_pooling2d_1 (AveragePoo (None, None, None, 1 0           max_pooling2d_2[0][0]
__________________________________________________________________________________________________
conv2d_6 (Conv2D)               (None, None, None, 6 12288       max_pooling2d_2[0][0]
__________________________________________________________________________________________________
conv2d_8 (Conv2D)               (None, None, None, 6 76800       activation_7[0][0]
__________________________________________________________________________________________________
conv2d_11 (Conv2D)              (None, None, None, 9 82944       activation_10[0][0]
__________________________________________________________________________________________________
conv2d_12 (Conv2D)              (None, None, None, 3 6144        average_pooling2d_1[0][0]
__________________________________________________________________________________________________
batch_normalization_6 (BatchNor (None, None, None, 6 192         conv2d_6[0][0]
__________________________________________________________________________________________________
batch_normalization_8 (BatchNor (None, None, None, 6 192         conv2d_8[0][0]
__________________________________________________________________________________________________
batch_normalization_11 (BatchNo (None, None, None, 9 288         conv2d_11[0][0]
__________________________________________________________________________________________________
batch_normalization_12 (BatchNo (None, None, None, 3 96          conv2d_12[0][0]
__________________________________________________________________________________________________
activation_6 (Activation)       (None, None, None, 6 0           batch_normalization_6[0][0]
__________________________________________________________________________________________________
activation_8 (Activation)       (None, None, None, 6 0           batch_normalization_8[0][0]
__________________________________________________________________________________________________
activation_11 (Activation)      (None, None, None, 9 0           batch_normalization_11[0][0]
__________________________________________________________________________________________________
activation_12 (Activation)      (None, None, None, 3 0           batch_normalization_12[0][0]
__________________________________________________________________________________________________
mixed0 (Concatenate)            (None, None, None, 2 0           activation_6[0][0]
                                                                 activation_8[0][0]
                                                                 activation_11[0][0]
                                                                 activation_12[0][0]
__________________________________________________________________________________________________
conv2d_16 (Conv2D)              (None, None, None, 6 16384       mixed0[0][0]
__________________________________________________________________________________________________
batch_normalization_16 (BatchNo (None, None, None, 6 192         conv2d_16[0][0]
__________________________________________________________________________________________________
activation_16 (Activation)      (None, None, None, 6 0           batch_normalization_16[0][0]
__________________________________________________________________________________________________
conv2d_14 (Conv2D)              (None, None, None, 4 12288       mixed0[0][0]
__________________________________________________________________________________________________
conv2d_17 (Conv2D)              (None, None, None, 9 55296       activation_16[0][0]
__________________________________________________________________________________________________
batch_normalization_14 (BatchNo (None, None, None, 4 144         conv2d_14[0][0]
__________________________________________________________________________________________________
batch_normalization_17 (BatchNo (None, None, None, 9 288         conv2d_17[0][0]
__________________________________________________________________________________________________
activation_14 (Activation)      (None, None, None, 4 0           batch_normalization_14[0][0]
__________________________________________________________________________________________________
activation_17 (Activation)      (None, None, None, 9 0           batch_normalization_17[0][0]
__________________________________________________________________________________________________
average_pooling2d_2 (AveragePoo (None, None, None, 2 0           mixed0[0][0]
__________________________________________________________________________________________________
conv2d_13 (Conv2D)              (None, None, None, 6 16384       mixed0[0][0]
__________________________________________________________________________________________________
conv2d_15 (Conv2D)              (None, None, None, 6 76800       activation_14[0][0]
__________________________________________________________________________________________________
conv2d_18 (Conv2D)              (None, None, None, 9 82944       activation_17[0][0]
__________________________________________________________________________________________________
conv2d_19 (Conv2D)              (None, None, None, 6 16384       average_pooling2d_2[0][0]
__________________________________________________________________________________________________
batch_normalization_13 (BatchNo (None, None, None, 6 192         conv2d_13[0][0]
__________________________________________________________________________________________________
batch_normalization_15 (BatchNo (None, None, None, 6 192         conv2d_15[0][0]
__________________________________________________________________________________________________
batch_normalization_18 (BatchNo (None, None, None, 9 288         conv2d_18[0][0]
__________________________________________________________________________________________________
batch_normalization_19 (BatchNo (None, None, None, 6 192         conv2d_19[0][0]
__________________________________________________________________________________________________
activation_13 (Activation)      (None, None, None, 6 0           batch_normalization_13[0][0]
__________________________________________________________________________________________________
activation_15 (Activation)      (None, None, None, 6 0           batch_normalization_15[0][0]
__________________________________________________________________________________________________
activation_18 (Activation)      (None, None, None, 9 0           batch_normalization_18[0][0]
__________________________________________________________________________________________________
activation_19 (Activation)      (None, None, None, 6 0           batch_normalization_19[0][0]
__________________________________________________________________________________________________
mixed1 (Concatenate)            (None, None, None, 2 0           activation_13[0][0]
                                                                 activation_15[0][0]
                                                                 activation_18[0][0]
                                                                 activation_19[0][0]
__________________________________________________________________________________________________
conv2d_23 (Conv2D)              (None, None, None, 6 18432       mixed1[0][0]
__________________________________________________________________________________________________
batch_normalization_23 (BatchNo (None, None, None, 6 192         conv2d_23[0][0]
__________________________________________________________________________________________________
activation_23 (Activation)      (None, None, None, 6 0           batch_normalization_23[0][0]
__________________________________________________________________________________________________
conv2d_21 (Conv2D)              (None, None, None, 4 13824       mixed1[0][0]
__________________________________________________________________________________________________
conv2d_24 (Conv2D)              (None, None, None, 9 55296       activation_23[0][0]
__________________________________________________________________________________________________
batch_normalization_21 (BatchNo (None, None, None, 4 144         conv2d_21[0][0]
__________________________________________________________________________________________________
batch_normalization_24 (BatchNo (None, None, None, 9 288         conv2d_24[0][0]
__________________________________________________________________________________________________
activation_21 (Activation)      (None, None, None, 4 0           batch_normalization_21[0][0]
__________________________________________________________________________________________________
activation_24 (Activation)      (None, None, None, 9 0           batch_normalization_24[0][0]
__________________________________________________________________________________________________
average_pooling2d_3 (AveragePoo (None, None, None, 2 0           mixed1[0][0]
__________________________________________________________________________________________________
conv2d_20 (Conv2D)              (None, None, None, 6 18432       mixed1[0][0]
__________________________________________________________________________________________________
conv2d_22 (Conv2D)              (None, None, None, 6 76800       activation_21[0][0]
__________________________________________________________________________________________________
conv2d_25 (Conv2D)              (None, None, None, 9 82944       activation_24[0][0]
__________________________________________________________________________________________________
conv2d_26 (Conv2D)              (None, None, None, 6 18432       average_pooling2d_3[0][0]
__________________________________________________________________________________________________
batch_normalization_20 (BatchNo (None, None, None, 6 192         conv2d_20[0][0]
__________________________________________________________________________________________________
batch_normalization_22 (BatchNo (None, None, None, 6 192         conv2d_22[0][0]
__________________________________________________________________________________________________
batch_normalization_25 (BatchNo (None, None, None, 9 288         conv2d_25[0][0]
__________________________________________________________________________________________________
batch_normalization_26 (BatchNo (None, None, None, 6 192         conv2d_26[0][0]
__________________________________________________________________________________________________
activation_20 (Activation)      (None, None, None, 6 0           batch_normalization_20[0][0]
__________________________________________________________________________________________________
activation_22 (Activation)      (None, None, None, 6 0           batch_normalization_22[0][0]
__________________________________________________________________________________________________
activation_25 (Activation)      (None, None, None, 9 0           batch_normalization_25[0][0]
__________________________________________________________________________________________________
activation_26 (Activation)      (None, None, None, 6 0           batch_normalization_26[0][0]
__________________________________________________________________________________________________
mixed2 (Concatenate)            (None, None, None, 2 0           activation_20[0][0]
                                                                 activation_22[0][0]
                                                                 activation_25[0][0]
                                                                 activation_26[0][0]
__________________________________________________________________________________________________
conv2d_28 (Conv2D)              (None, None, None, 6 18432       mixed2[0][0]
__________________________________________________________________________________________________
batch_normalization_28 (BatchNo (None, None, None, 6 192         conv2d_28[0][0]
__________________________________________________________________________________________________
activation_28 (Activation)      (None, None, None, 6 0           batch_normalization_28[0][0]
__________________________________________________________________________________________________
conv2d_29 (Conv2D)              (None, None, None, 9 55296       activation_28[0][0]
__________________________________________________________________________________________________
batch_normalization_29 (BatchNo (None, None, None, 9 288         conv2d_29[0][0]
__________________________________________________________________________________________________
activation_29 (Activation)      (None, None, None, 9 0           batch_normalization_29[0][0]
__________________________________________________________________________________________________
conv2d_27 (Conv2D)              (None, None, None, 3 995328      mixed2[0][0]
__________________________________________________________________________________________________
conv2d_30 (Conv2D)              (None, None, None, 9 82944       activation_29[0][0]
__________________________________________________________________________________________________
batch_normalization_27 (BatchNo (None, None, None, 3 1152        conv2d_27[0][0]
__________________________________________________________________________________________________
batch_normalization_30 (BatchNo (None, None, None, 9 288         conv2d_30[0][0]
__________________________________________________________________________________________________
activation_27 (Activation)      (None, None, None, 3 0           batch_normalization_27[0][0]
__________________________________________________________________________________________________
activation_30 (Activation)      (None, None, None, 9 0           batch_normalization_30[0][0]
__________________________________________________________________________________________________
max_pooling2d_3 (MaxPooling2D)  (None, None, None, 2 0           mixed2[0][0]
__________________________________________________________________________________________________
mixed3 (Concatenate)            (None, None, None, 7 0           activation_27[0][0]
                                                                 activation_30[0][0]
                                                                 max_pooling2d_3[0][0]
__________________________________________________________________________________________________
conv2d_35 (Conv2D)              (None, None, None, 1 98304       mixed3[0][0]
__________________________________________________________________________________________________
batch_normalization_35 (BatchNo (None, None, None, 1 384         conv2d_35[0][0]
__________________________________________________________________________________________________
activation_35 (Activation)      (None, None, None, 1 0           batch_normalization_35[0][0]
__________________________________________________________________________________________________
conv2d_36 (Conv2D)              (None, None, None, 1 114688      activation_35[0][0]
__________________________________________________________________________________________________
batch_normalization_36 (BatchNo (None, None, None, 1 384         conv2d_36[0][0]
__________________________________________________________________________________________________
activation_36 (Activation)      (None, None, None, 1 0           batch_normalization_36[0][0]
__________________________________________________________________________________________________
conv2d_32 (Conv2D)              (None, None, None, 1 98304       mixed3[0][0]
__________________________________________________________________________________________________
conv2d_37 (Conv2D)              (None, None, None, 1 114688      activation_36[0][0]
__________________________________________________________________________________________________
batch_normalization_32 (BatchNo (None, None, None, 1 384         conv2d_32[0][0]
__________________________________________________________________________________________________
batch_normalization_37 (BatchNo (None, None, None, 1 384         conv2d_37[0][0]
__________________________________________________________________________________________________
activation_32 (Activation)      (None, None, None, 1 0           batch_normalization_32[0][0]
__________________________________________________________________________________________________
activation_37 (Activation)      (None, None, None, 1 0           batch_normalization_37[0][0]
__________________________________________________________________________________________________
conv2d_33 (Conv2D)              (None, None, None, 1 114688      activation_32[0][0]
__________________________________________________________________________________________________
conv2d_38 (Conv2D)              (None, None, None, 1 114688      activation_37[0][0]
__________________________________________________________________________________________________
batch_normalization_33 (BatchNo (None, None, None, 1 384         conv2d_33[0][0]
__________________________________________________________________________________________________
batch_normalization_38 (BatchNo (None, None, None, 1 384         conv2d_38[0][0]
__________________________________________________________________________________________________
activation_33 (Activation)      (None, None, None, 1 0           batch_normalization_33[0][0]
__________________________________________________________________________________________________
activation_38 (Activation)      (None, None, None, 1 0           batch_normalization_38[0][0]
__________________________________________________________________________________________________
average_pooling2d_4 (AveragePoo (None, None, None, 7 0           mixed3[0][0]
__________________________________________________________________________________________________
conv2d_31 (Conv2D)              (None, None, None, 1 147456      mixed3[0][0]
__________________________________________________________________________________________________
conv2d_34 (Conv2D)              (None, None, None, 1 172032      activation_33[0][0]
__________________________________________________________________________________________________
conv2d_39 (Conv2D)              (None, None, None, 1 172032      activation_38[0][0]
__________________________________________________________________________________________________
conv2d_40 (Conv2D)              (None, None, None, 1 147456      average_pooling2d_4[0][0]
__________________________________________________________________________________________________
batch_normalization_31 (BatchNo (None, None, None, 1 576         conv2d_31[0][0]
__________________________________________________________________________________________________
batch_normalization_34 (BatchNo (None, None, None, 1 576         conv2d_34[0][0]
__________________________________________________________________________________________________
batch_normalization_39 (BatchNo (None, None, None, 1 576         conv2d_39[0][0]
__________________________________________________________________________________________________
batch_normalization_40 (BatchNo (None, None, None, 1 576         conv2d_40[0][0]
__________________________________________________________________________________________________
activation_31 (Activation)      (None, None, None, 1 0           batch_normalization_31[0][0]
__________________________________________________________________________________________________
activation_34 (Activation)      (None, None, None, 1 0           batch_normalization_34[0][0]
__________________________________________________________________________________________________
activation_39 (Activation)      (None, None, None, 1 0           batch_normalization_39[0][0]
__________________________________________________________________________________________________
activation_40 (Activation)      (None, None, None, 1 0           batch_normalization_40[0][0]
__________________________________________________________________________________________________
mixed4 (Concatenate)            (None, None, None, 7 0           activation_31[0][0]
                                                                 activation_34[0][0]
                                                                 activation_39[0][0]
                                                                 activation_40[0][0]
__________________________________________________________________________________________________
conv2d_45 (Conv2D)              (None, None, None, 1 122880      mixed4[0][0]
__________________________________________________________________________________________________
batch_normalization_45 (BatchNo (None, None, None, 1 480         conv2d_45[0][0]
__________________________________________________________________________________________________
activation_45 (Activation)      (None, None, None, 1 0           batch_normalization_45[0][0]
__________________________________________________________________________________________________
conv2d_46 (Conv2D)              (None, None, None, 1 179200      activation_45[0][0]
__________________________________________________________________________________________________
batch_normalization_46 (BatchNo (None, None, None, 1 480         conv2d_46[0][0]
__________________________________________________________________________________________________
activation_46 (Activation)      (None, None, None, 1 0           batch_normalization_46[0][0]
__________________________________________________________________________________________________
conv2d_42 (Conv2D)              (None, None, None, 1 122880      mixed4[0][0]
__________________________________________________________________________________________________
conv2d_47 (Conv2D)              (None, None, None, 1 179200      activation_46[0][0]
__________________________________________________________________________________________________
batch_normalization_42 (BatchNo (None, None, None, 1 480         conv2d_42[0][0]
__________________________________________________________________________________________________
batch_normalization_47 (BatchNo (None, None, None, 1 480         conv2d_47[0][0]
__________________________________________________________________________________________________
activation_42 (Activation)      (None, None, None, 1 0           batch_normalization_42[0][0]
__________________________________________________________________________________________________
activation_47 (Activation)      (None, None, None, 1 0           batch_normalization_47[0][0]
__________________________________________________________________________________________________
conv2d_43 (Conv2D)              (None, None, None, 1 179200      activation_42[0][0]
__________________________________________________________________________________________________
conv2d_48 (Conv2D)              (None, None, None, 1 179200      activation_47[0][0]
__________________________________________________________________________________________________
batch_normalization_43 (BatchNo (None, None, None, 1 480         conv2d_43[0][0]
__________________________________________________________________________________________________
batch_normalization_48 (BatchNo (None, None, None, 1 480         conv2d_48[0][0]
__________________________________________________________________________________________________
activation_43 (Activation)      (None, None, None, 1 0           batch_normalization_43[0][0]
__________________________________________________________________________________________________
activation_48 (Activation)      (None, None, None, 1 0           batch_normalization_48[0][0]
__________________________________________________________________________________________________
average_pooling2d_5 (AveragePoo (None, None, None, 7 0           mixed4[0][0]
__________________________________________________________________________________________________
conv2d_41 (Conv2D)              (None, None, None, 1 147456      mixed4[0][0]
__________________________________________________________________________________________________
conv2d_44 (Conv2D)              (None, None, None, 1 215040      activation_43[0][0]
__________________________________________________________________________________________________
conv2d_49 (Conv2D)              (None, None, None, 1 215040      activation_48[0][0]
__________________________________________________________________________________________________
conv2d_50 (Conv2D)              (None, None, None, 1 147456      average_pooling2d_5[0][0]
__________________________________________________________________________________________________
batch_normalization_41 (BatchNo (None, None, None, 1 576         conv2d_41[0][0]
__________________________________________________________________________________________________
batch_normalization_44 (BatchNo (None, None, None, 1 576         conv2d_44[0][0]
__________________________________________________________________________________________________
batch_normalization_49 (BatchNo (None, None, None, 1 576         conv2d_49[0][0]
__________________________________________________________________________________________________
batch_normalization_50 (BatchNo (None, None, None, 1 576         conv2d_50[0][0]
__________________________________________________________________________________________________

338activation_41 (Activation) (None, None, None, 1 0 batch_normalization_41[0][0]

339__________________________________________________________________________________________________

340activation_44 (Activation) (None, None, None, 1 0 batch_normalization_44[0][0]

341__________________________________________________________________________________________________

342activation_49 (Activation) (None, None, None, 1 0 batch_normalization_49[0][0]

343__________________________________________________________________________________________________

344activation_50 (Activation) (None, None, None, 1 0 batch_normalization_50[0][0]

345__________________________________________________________________________________________________

346mixed5 (Concatenate) (None, None, None, 7 0 activation_41[0][0]

347 activation_44[0][0]

348 activation_49[0][0]

349 activation_50[0][0]

350__________________________________________________________________________________________________

351conv2d_55 (Conv2D) (None, None, None, 1 122880 mixed5[0][0]

352__________________________________________________________________________________________________

353batch_normalization_55 (BatchNo (None, None, None, 1 480 conv2d_55[0][0]

354__________________________________________________________________________________________________

355activation_55 (Activation) (None, None, None, 1 0 batch_normalization_55[0][0]

356__________________________________________________________________________________________________

357conv2d_56 (Conv2D) (None, None, None, 1 179200 activation_55[0][0]

358__________________________________________________________________________________________________

359batch_normalization_56 (BatchNo (None, None, None, 1 480 conv2d_56[0][0]

360__________________________________________________________________________________________________

361activation_56 (Activation) (None, None, None, 1 0 batch_normalization_56[0][0]

362__________________________________________________________________________________________________

363conv2d_52 (Conv2D) (None, None, None, 1 122880 mixed5[0][0]

364__________________________________________________________________________________________________

365conv2d_57 (Conv2D) (None, None, None, 1 179200 activation_56[0][0]

366__________________________________________________________________________________________________

367batch_normalization_52 (BatchNo (None, None, None, 1 480 conv2d_52[0][0]

368__________________________________________________________________________________________________

369batch_normalization_57 (BatchNo (None, None, None, 1 480 conv2d_57[0][0]

370__________________________________________________________________________________________________

371activation_52 (Activation) (None, None, None, 1 0 batch_normalization_52[0][0]

372__________________________________________________________________________________________________

373activation_57 (Activation) (None, None, None, 1 0 batch_normalization_57[0][0]

374__________________________________________________________________________________________________

375conv2d_53 (Conv2D) (None, None, None, 1 179200 activation_52[0][0]

376__________________________________________________________________________________________________

377conv2d_58 (Conv2D) (None, None, None, 1 179200 activation_57[0][0]

378__________________________________________________________________________________________________

379batch_normalization_53 (BatchNo (None, None, None, 1 480 conv2d_53[0][0]

380__________________________________________________________________________________________________

381batch_normalization_58 (BatchNo (None, None, None, 1 480 conv2d_58[0][0]

382__________________________________________________________________________________________________

383activation_53 (Activation) (None, None, None, 1 0 batch_normalization_53[0][0]

384__________________________________________________________________________________________________

385activation_58 (Activation) (None, None, None, 1 0 batch_normalization_58[0][0]

386__________________________________________________________________________________________________

387average_pooling2d_6 (AveragePoo (None, None, None, 7 0 mixed5[0][0]

388__________________________________________________________________________________________________

389conv2d_51 (Conv2D) (None, None, None, 1 147456 mixed5[0][0]

390__________________________________________________________________________________________________

391conv2d_54 (Conv2D) (None, None, None, 1 215040 activation_53[0][0]

392__________________________________________________________________________________________________

393conv2d_59 (Conv2D) (None, None, None, 1 215040 activation_58[0][0]

394__________________________________________________________________________________________________

395conv2d_60 (Conv2D) (None, None, None, 1 147456 average_pooling2d_6[0][0]

396__________________________________________________________________________________________________

397batch_normalization_51 (BatchNo (None, None, None, 1 576 conv2d_51[0][0]

398__________________________________________________________________________________________________

399batch_normalization_54 (BatchNo (None, None, None, 1 576 conv2d_54[0][0]

400__________________________________________________________________________________________________

401batch_normalization_59 (BatchNo (None, None, None, 1 576 conv2d_59[0][0]

402__________________________________________________________________________________________________

403batch_normalization_60 (BatchNo (None, None, None, 1 576 conv2d_60[0][0]

404__________________________________________________________________________________________________

405activation_51 (Activation) (None, None, None, 1 0 batch_normalization_51[0][0]

406__________________________________________________________________________________________________

407activation_54 (Activation) (None, None, None, 1 0 batch_normalization_54[0][0]

408__________________________________________________________________________________________________

409activation_59 (Activation) (None, None, None, 1 0 batch_normalization_59[0][0]

410__________________________________________________________________________________________________

411activation_60 (Activation) (None, None, None, 1 0 batch_normalization_60[0][0]

412__________________________________________________________________________________________________

413mixed6 (Concatenate) (None, None, None, 7 0 activation_51[0][0]

414 activation_54[0][0]

415 activation_59[0][0]

416 activation_60[0][0]

417__________________________________________________________________________________________________

418conv2d_65 (Conv2D) (None, None, None, 1 147456 mixed6[0][0]

419__________________________________________________________________________________________________

420batch_normalization_65 (BatchNo (None, None, None, 1 576 conv2d_65[0][0]

421__________________________________________________________________________________________________

422activation_65 (Activation) (None, None, None, 1 0 batch_normalization_65[0][0]

423__________________________________________________________________________________________________

424conv2d_66 (Conv2D) (None, None, None, 1 258048 activation_65[0][0]

425__________________________________________________________________________________________________

426batch_normalization_66 (BatchNo (None, None, None, 1 576 conv2d_66[0][0]

427__________________________________________________________________________________________________

428activation_66 (Activation) (None, None, None, 1 0 batch_normalization_66[0][0]

429__________________________________________________________________________________________________

430conv2d_62 (Conv2D) (None, None, None, 1 147456 mixed6[0][0]

431__________________________________________________________________________________________________

432conv2d_67 (Conv2D) (None, None, None, 1 258048 activation_66[0][0]

433__________________________________________________________________________________________________

434batch_normalization_62 (BatchNo (None, None, None, 1 576 conv2d_62[0][0]

435__________________________________________________________________________________________________

436batch_normalization_67 (BatchNo (None, None, None, 1 576 conv2d_67[0][0]

437__________________________________________________________________________________________________

438activation_62 (Activation) (None, None, None, 1 0 batch_normalization_62[0][0]

439__________________________________________________________________________________________________

440activation_67 (Activation) (None, None, None, 1 0 batch_normalization_67[0][0]

441__________________________________________________________________________________________________

442conv2d_63 (Conv2D) (None, None, None, 1 258048 activation_62[0][0]

443__________________________________________________________________________________________________

444conv2d_68 (Conv2D) (None, None, None, 1 258048 activation_67[0][0]

445__________________________________________________________________________________________________

446batch_normalization_63 (BatchNo (None, None, None, 1 576 conv2d_63[0][0]

447__________________________________________________________________________________________________

448batch_normalization_68 (BatchNo (None, None, None, 1 576 conv2d_68[0][0]

449__________________________________________________________________________________________________

450activation_63 (Activation) (None, None, None, 1 0 batch_normalization_63[0][0]

451__________________________________________________________________________________________________

452activation_68 (Activation) (None, None, None, 1 0 batch_normalization_68[0][0]

453__________________________________________________________________________________________________

454average_pooling2d_7 (AveragePoo (None, None, None, 7 0 mixed6[0][0]

455__________________________________________________________________________________________________

456conv2d_61 (Conv2D) (None, None, None, 1 147456 mixed6[0][0]

457__________________________________________________________________________________________________

458conv2d_64 (Conv2D) (None, None, None, 1 258048 activation_63[0][0]

459__________________________________________________________________________________________________

460conv2d_69 (Conv2D) (None, None, None, 1 258048 activation_68[0][0]

461__________________________________________________________________________________________________

462conv2d_70 (Conv2D) (None, None, None, 1 147456 average_pooling2d_7[0][0]

463__________________________________________________________________________________________________

464batch_normalization_61 (BatchNo (None, None, None, 1 576 conv2d_61[0][0]

465__________________________________________________________________________________________________

466batch_normalization_64 (BatchNo (None, None, None, 1 576 conv2d_64[0][0]

467__________________________________________________________________________________________________

468batch_normalization_69 (BatchNo (None, None, None, 1 576 conv2d_69[0][0]

469__________________________________________________________________________________________________

470batch_normalization_70 (BatchNo (None, None, None, 1 576 conv2d_70[0][0]

471__________________________________________________________________________________________________

472activation_61 (Activation) (None, None, None, 1 0 batch_normalization_61[0][0]

473__________________________________________________________________________________________________

474activation_64 (Activation) (None, None, None, 1 0 batch_normalization_64[0][0]

475__________________________________________________________________________________________________

476activation_69 (Activation) (None, None, None, 1 0 batch_normalization_69[0][0]

477__________________________________________________________________________________________________

478activation_70 (Activation) (None, None, None, 1 0 batch_normalization_70[0][0]

479__________________________________________________________________________________________________

480mixed7 (Concatenate) (None, None, None, 7 0 activation_61[0][0]

481 activation_64[0][0]

482 activation_69[0][0]

483 activation_70[0][0]

484__________________________________________________________________________________________________

485conv2d_73 (Conv2D) (None, None, None, 1 147456 mixed7[0][0]

486__________________________________________________________________________________________________

487batch_normalization_73 (BatchNo (None, None, None, 1 576 conv2d_73[0][0]

488__________________________________________________________________________________________________

489activation_73 (Activation) (None, None, None, 1 0 batch_normalization_73[0][0]

490__________________________________________________________________________________________________

491conv2d_74 (Conv2D) (None, None, None, 1 258048 activation_73[0][0]

492__________________________________________________________________________________________________

493batch_normalization_74 (BatchNo (None, None, None, 1 576 conv2d_74[0][0]

494__________________________________________________________________________________________________

495activation_74 (Activation) (None, None, None, 1 0 batch_normalization_74[0][0]

496__________________________________________________________________________________________________

497conv2d_71 (Conv2D) (None, None, None, 1 147456 mixed7[0][0]

498__________________________________________________________________________________________________

499conv2d_75 (Conv2D) (None, None, None, 1 258048 activation_74[0][0]

500__________________________________________________________________________________________________

501batch_normalization_71 (BatchNo (None, None, None, 1 576 conv2d_71[0][0]

502__________________________________________________________________________________________________

503batch_normalization_75 (BatchNo (None, None, None, 1 576 conv2d_75[0][0]

504__________________________________________________________________________________________________

505activation_71 (Activation) (None, None, None, 1 0 batch_normalization_71[0][0]

506__________________________________________________________________________________________________

507activation_75 (Activation) (None, None, None, 1 0 batch_normalization_75[0][0]

508__________________________________________________________________________________________________

509conv2d_72 (Conv2D) (None, None, None, 3 552960 activation_71[0][0]

510__________________________________________________________________________________________________

511conv2d_76 (Conv2D) (None, None, None, 1 331776 activation_75[0][0]

512__________________________________________________________________________________________________

513batch_normalization_72 (BatchNo (None, None, None, 3 960 conv2d_72[0][0]

514__________________________________________________________________________________________________

515batch_normalization_76 (BatchNo (None, None, None, 1 576 conv2d_76[0][0]

516__________________________________________________________________________________________________

517activation_72 (Activation) (None, None, None, 3 0 batch_normalization_72[0][0]

518__________________________________________________________________________________________________

519activation_76 (Activation) (None, None, None, 1 0 batch_normalization_76[0][0]

520__________________________________________________________________________________________________

521max_pooling2d_4 (MaxPooling2D) (None, None, None, 7 0 mixed7[0][0]

522__________________________________________________________________________________________________

523mixed8 (Concatenate) (None, None, None, 1 0 activation_72[0][0]

524 activation_76[0][0]

525 max_pooling2d_4[0][0]

526__________________________________________________________________________________________________

527conv2d_81 (Conv2D) (None, None, None, 4 573440 mixed8[0][0]

528__________________________________________________________________________________________________

529batch_normalization_81 (BatchNo (None, None, None, 4 1344 conv2d_81[0][0]

530__________________________________________________________________________________________________

531activation_81 (Activation) (None, None, None, 4 0 batch_normalization_81[0][0]

532__________________________________________________________________________________________________

533conv2d_78 (Conv2D) (None, None, None, 3 491520 mixed8[0][0]

534__________________________________________________________________________________________________

535conv2d_82 (Conv2D) (None, None, None, 3 1548288 activation_81[0][0]

536__________________________________________________________________________________________________

537batch_normalization_78 (BatchNo (None, None, None, 3 1152 conv2d_78[0][0]

538__________________________________________________________________________________________________

539batch_normalization_82 (BatchNo (None, None, None, 3 1152 conv2d_82[0][0]

540__________________________________________________________________________________________________

541activation_78 (Activation) (None, None, None, 3 0 batch_normalization_78[0][0]

542__________________________________________________________________________________________________

543activation_82 (Activation) (None, None, None, 3 0 batch_normalization_82[0][0]

544__________________________________________________________________________________________________

545conv2d_79 (Conv2D) (None, None, None, 3 442368 activation_78[0][0]

546__________________________________________________________________________________________________

547conv2d_80 (Conv2D) (None, None, None, 3 442368 activation_78[0][0]

548__________________________________________________________________________________________________

549conv2d_83 (Conv2D) (None, None, None, 3 442368 activation_82[0][0]

550__________________________________________________________________________________________________

551conv2d_84 (Conv2D) (None, None, None, 3 442368 activation_82[0][0]

552__________________________________________________________________________________________________

553average_pooling2d_8 (AveragePoo (None, None, None, 1 0 mixed8[0][0]

554__________________________________________________________________________________________________

555conv2d_77 (Conv2D) (None, None, None, 3 409600 mixed8[0][0]

556__________________________________________________________________________________________________

557batch_normalization_79 (BatchNo (None, None, None, 3 1152 conv2d_79[0][0]

558__________________________________________________________________________________________________

559batch_normalization_80 (BatchNo (None, None, None, 3 1152 conv2d_80[0][0]

560__________________________________________________________________________________________________

561batch_normalization_83 (BatchNo (None, None, None, 3 1152 conv2d_83[0][0]

562__________________________________________________________________________________________________

563batch_normalization_84 (BatchNo (None, None, None, 3 1152 conv2d_84[0][0]

564__________________________________________________________________________________________________

565conv2d_85 (Conv2D) (None, None, None, 1 245760 average_pooling2d_8[0][0]

566__________________________________________________________________________________________________

567batch_normalization_77 (BatchNo (None, None, None, 3 960 conv2d_77[0][0]

568__________________________________________________________________________________________________

569activation_79 (Activation) (None, None, None, 3 0 batch_normalization_79[0][0]

570__________________________________________________________________________________________________

571activation_80 (Activation) (None, None, None, 3 0 batch_normalization_80[0][0]

572__________________________________________________________________________________________________

573activation_83 (Activation) (None, None, None, 3 0 batch_normalization_83[0][0]

574__________________________________________________________________________________________________

575activation_84 (Activation) (None, None, None, 3 0 batch_normalization_84[0][0]

576__________________________________________________________________________________________________

577batch_normalization_85 (BatchNo (None, None, None, 1 576 conv2d_85[0][0]

578__________________________________________________________________________________________________

579activation_77 (Activation) (None, None, None, 3 0 batch_normalization_77[0][0]

580__________________________________________________________________________________________________

581mixed9_0 (Concatenate) (None, None, None, 7 0 activation_79[0][0]

582 activation_80[0][0]

583__________________________________________________________________________________________________

584concatenate_1 (Concatenate) (None, None, None, 7 0 activation_83[0][0]

585 activation_84[0][0]

586__________________________________________________________________________________________________

587activation_85 (Activation) (None, None, None, 1 0 batch_normalization_85[0][0]

588__________________________________________________________________________________________________

589mixed9 (Concatenate) (None, None, None, 2 0 activation_77[0][0]

590 mixed9_0[0][0]

591 concatenate_1[0][0]

592 activation_85[0][0]

593__________________________________________________________________________________________________

594conv2d_90 (Conv2D) (None, None, None, 4 917504 mixed9[0][0]

595__________________________________________________________________________________________________

596batch_normalization_90 (BatchNo (None, None, None, 4 1344 conv2d_90[0][0]

597__________________________________________________________________________________________________

598activation_90 (Activation) (None, None, None, 4 0 batch_normalization_90[0][0]

599__________________________________________________________________________________________________

600conv2d_87 (Conv2D) (None, None, None, 3 786432 mixed9[0][0]

601__________________________________________________________________________________________________

602conv2d_91 (Conv2D) (None, None, None, 3 1548288 activation_90[0][0]

603__________________________________________________________________________________________________

604batch_normalization_87 (BatchNo (None, None, None, 3 1152 conv2d_87[0][0]

605__________________________________________________________________________________________________

606batch_normalization_91 (BatchNo (None, None, None, 3 1152 conv2d_91[0][0]

607__________________________________________________________________________________________________

608activation_87 (Activation) (None, None, None, 3 0 batch_normalization_87[0][0]

609__________________________________________________________________________________________________

610activation_91 (Activation) (None, None, None, 3 0 batch_normalization_91[0][0]

611__________________________________________________________________________________________________

612conv2d_88 (Conv2D) (None, None, None, 3 442368 activation_87[0][0]

613__________________________________________________________________________________________________

614conv2d_89 (Conv2D) (None, None, None, 3 442368 activation_87[0][0]

615__________________________________________________________________________________________________

616conv2d_92 (Conv2D) (None, None, None, 3 442368 activation_91[0][0]

617__________________________________________________________________________________________________

618conv2d_93 (Conv2D) (None, None, None, 3 442368 activation_91[0][0]

619__________________________________________________________________________________________________

620average_pooling2d_9 (AveragePoo (None, None, None, 2 0 mixed9[0][0]

621__________________________________________________________________________________________________

622conv2d_86 (Conv2D) (None, None, None, 3 655360 mixed9[0][0]

623__________________________________________________________________________________________________

624batch_normalization_88 (BatchNo (None, None, None, 3 1152 conv2d_88[0][0]

625__________________________________________________________________________________________________

626batch_normalization_89 (BatchNo (None, None, None, 3 1152 conv2d_89[0][0]

627__________________________________________________________________________________________________

628batch_normalization_92 (BatchNo (None, None, None, 3 1152 conv2d_92[0][0]

629__________________________________________________________________________________________________

630batch_normalization_93 (BatchNo (None, None, None, 3 1152 conv2d_93[0][0]

631__________________________________________________________________________________________________

632conv2d_94 (Conv2D) (None, None, None, 1 393216 average_pooling2d_9[0][0]

633__________________________________________________________________________________________________

634batch_normalization_86 (BatchNo (None, None, None, 3 960 conv2d_86[0][0]

635__________________________________________________________________________________________________

636activation_88 (Activation) (None, None, None, 3 0 batch_normalization_88[0][0]

637__________________________________________________________________________________________________

638activation_89 (Activation) (None, None, None, 3 0 batch_normalization_89[0][0]

639__________________________________________________________________________________________________

640activation_92 (Activation) (None, None, None, 3 0 batch_normalization_92[0][0]

641__________________________________________________________________________________________________

642activation_93 (Activation) (None, None, None, 3 0 batch_normalization_93[0][0]

643__________________________________________________________________________________________________

644batch_normalization_94 (BatchNo (None, None, None, 1 576 conv2d_94[0][0]

645__________________________________________________________________________________________________

646activation_86 (Activation) (None, None, None, 3 0 batch_normalization_86[0][0]

647__________________________________________________________________________________________________

648mixed9_1 (Concatenate) (None, None, None, 7 0 activation_88[0][0]

649 activation_89[0][0]

650__________________________________________________________________________________________________

651concatenate_2 (Concatenate) (None, None, None, 7 0 activation_92[0][0]

652 activation_93[0][0]

653__________________________________________________________________________________________________

654activation_94 (Activation) (None, None, None, 1 0 batch_normalization_94[0][0]

655__________________________________________________________________________________________________

656mixed10 (Concatenate) (None, None, None, 2 0 activation_86[0][0]

657 mixed9_1[0][0]

658 concatenate_2[0][0]

659 activation_94[0][0]

660__________________________________________________________________________________________________

661global_average_pooling2d_1 (Glo (None, 2048) 0 mixed10[0][0]

662__________________________________________________________________________________________________

663dense_1 (Dense) (None, 1024) 2098176 global_average_pooling2d_1[0][0]

664__________________________________________________________________________________________________

665dense_2 (Dense) (None, 2) 2050 dense_1[0][0]

666==================================================================================================

667Total params: 23,903,010

668Trainable params: 2,100,226

669Non-trainable params: 21,802,784

670__________________________________________________________________________________________________

The part that is retrained has a little over 2 million parameters (2,100,226), while the layers before it are frozen and hold about 21.8 million parameters (21,802,784).
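As a quick sanity check, the trainable count can be reproduced by hand from the last two rows of the summary: a Dense layer has inputs × units weights plus one bias per unit. The sketch below is only illustrative arithmetic, and the helper name `dense_params` is made up for this example.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

# Shapes taken from the summary: 2048 -> 1024 -> 2
dense_1 = dense_params(2048, 1024)
dense_2 = dense_params(1024, 2)
trainable = dense_1 + dense_2

total = 23903010  # "Total params" line of the summary
non_trainable = total - trainable

print(dense_1, dense_2, trainable, non_trainable)
```

The two dense layers account for every trainable parameter, which is consistent with the pretrained convolutional base being frozen.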

The graph of the model (you can download it and zoom in to see it more clearly).

We split the dataset into 5 parts: 4 parts form the training set and 1 part forms the validation set.

    from keras.utils import np_utils

    # Load images (X), integer labels (y) and class names (tags)
    X, y, tags = dataset.dataset(data_directory, n)
    nb_classes = len(tags)

    # The first 4/5 of the samples are used for training, the rest for validation
    sample_count = len(y)
    train_size = sample_count * 4 // 5
    X_train = X[:train_size]
    y_train = y[:train_size]
    Y_train = np_utils.to_categorical(y_train, nb_classes)  # one-hot labels
    X_test = X[train_size:]
    y_test = y[train_size:]
    Y_test = np_utils.to_categorical(y_test, nb_classes)
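The slicing above keeps the first 4/5 of the samples for training, so the validation part is only balanced if the samples arrive in shuffled order. Whether `dataset.dataset` shuffles is not shown in this section, so the NumPy-only sketch below makes the shuffle explicit and writes the one-hot encoding by hand. The function names `split_train_val` and `one_hot` are hypothetical and not part of the original code.

```python
import numpy as np

def one_hot(labels, n_classes):
    """Turn integer labels into one-hot rows (same idea as to_categorical)."""
    out = np.zeros((len(labels), n_classes), dtype=np.float32)
    out[np.arange(len(labels)), labels] = 1.0
    return out

def split_train_val(X, y, train_fraction=0.8, seed=0):
    """Shuffle X and y together, then cut at train_fraction."""
    X = np.asarray(X)
    y = np.asarray(y)
    order = np.random.RandomState(seed).permutation(len(y))
    X, y = X[order], y[order]
    cut = int(len(y) * train_fraction)
    return (X[:cut], y[:cut]), (X[cut:], y[cut:])

# Toy data standing in for images: 10 samples, 2 classes
X_demo = np.arange(10).reshape(10, 1)
y_demo = np.array([0] * 5 + [1] * 5)
(X_tr, y_tr), (X_va, y_va) = split_train_val(X_demo, y_demo)
Y_tr = one_hot(y_tr, 2)
print(X_tr.shape, X_va.shape, Y_tr.shape)
```

Fixing the seed keeps the split reproducible between runs, which makes validation scores comparable.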

To reduce overfitting, we augment the data by applying random affine transformations to the original images.

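    from keras.preprocessing.image import ImageDataGenerator

    # Random rotations, shifts, flips, zooms and channel shifts; no normalization
    datagen = ImageDataGenerator(
        featurewise_center=False,
        samplewise_center=False,
        featurewise_std_normalization=False,
        samplewise_std_normalization=False,
        zca_whitening=False,
        rotation_range=45,
        width_shift_range=0.25,
        height_shift_range=0.25,
        horizontal_flip=True,
        vertical_flip=False,
        zoom_range=0.5,
        channel_shift_range=0.5,
        fill_mode='nearest')

    # fit() only computes statistics for the featurewise and ZCA options,
    # which are all disabled here, so this call is kept for completeness
    datagen.fit(X_train)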
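To make "affine transformation" concrete, here is a rough NumPy sketch of how one random rotation, shift, and zoom can be combined into a single 3×3 matrix in homogeneous coordinates. It mirrors the ranges configured above but is not Keras' internal implementation. `random_affine`, its arguments, and the 200×300 image size are choices made only for this illustration.

```python
import numpy as np

def random_affine(height, width, rotation_range=45.0,
                  shift_range=0.25, zoom_range=0.5, rng=None):
    """Sample one rotation/shift/zoom and compose them into a 3x3 matrix."""
    if rng is None:
        rng = np.random.RandomState()
    theta = np.deg2rad(rng.uniform(-rotation_range, rotation_range))
    ty = rng.uniform(-shift_range, shift_range) * height
    tx = rng.uniform(-shift_range, shift_range) * width
    zoom = rng.uniform(1.0 - zoom_range, 1.0 + zoom_range)

    rotation = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                         [np.sin(theta), np.cos(theta), 0.0],
                         [0.0, 0.0, 1.0]])
    shift = np.array([[1.0, 0.0, tx],
                      [0.0, 1.0, ty],
                      [0.0, 0.0, 1.0]])
    scale = np.array([[zoom, 0.0, 0.0],
                      [0.0, zoom, 0.0],
                      [0.0, 0.0, 1.0]])
    # Applied right to left: zoom first, then rotate, then shift
    return shift @ rotation @ scale

matrix = random_affine(200, 300, rng=np.random.RandomState(0))
moved = matrix @ np.array([10.0, 20.0, 1.0])  # where the point (x=10, y=20) lands
print(matrix)
print(moved[:2])
```

Because every sampled matrix differs, the network sees a slightly different version of each image in every epoch, which is what discourages it from memorizing the training set.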

Finally, we build the model, train it, and save it. This step takes quite a long time.

model = net.build_model(nb_classes)
model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=["accuracy"])

# train the model on the new data for a few epochs
print("training the newly added dense layers")

# Keras 2 counts batches, not samples, so both values are numbers of full batches.
# The Keras 1 argument samples_per_epoch is dropped in favor of steps_per_epoch.
steps_per_epoch = X_train.shape[0] // batch_size
validation_steps = X_test.shape[0] // batch_size

model.fit_generator(datagen.flow(X_train, Y_train, batch_size=batch_size, shuffle=True),
                    epochs=nb_epoch,
                    steps_per_epoch=steps_per_epoch,
                    validation_data=datagen.flow(X_test, Y_test, batch_size=batch_size),
                    validation_steps=validation_steps,
                    )

net.save(model, tags, model_file_prefix)
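The step counts are plain integer division. The sketch below uses the split sizes implied by the confusion matrices in this article (24,788 images in total, so 19,830 for training and 4,958 for validation) and a batch size of 32. The batch size is an assumption, since the value used for training is not shown here.

```python
def steps_for(sample_count, batch_size):
    """Number of full batches that fit in sample_count samples."""
    return sample_count // batch_size

total = 24788                      # sum of the first confusion matrix
train_size = total * 4 // 5        # 19830
val_size = total - train_size      # 4958
batch_size = 32                    # assumed value

print(steps_for(train_size, batch_size))  # 619
print(steps_for(val_size, batch_size))    # 154
```

Passing the sample count itself as `validation_steps` would request `batch_size` times more validation batches than one pass over the data needs.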

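Accuracy on the training set. (The snippet below is written for `X_test`, but the printed counts add up to 24,788 images, so the reported run appears to have covered the whole dataset.)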

Y_pred = model.predict(X_test, batch_size=batch_size)
y_pred = np.argmax(Y_pred, axis=1)  # most probable class per image

accuracy = float(np.sum(y_test == y_pred)) / len(y_test)
print("accuracy: ", accuracy)

# confusion[i, j] counts images predicted as class i whose true class is j
confusion = np.zeros((nb_classes, nb_classes), dtype=np.int32)
for predicted_index, actual_index in zip(y_pred, y_test):
    confusion[predicted_index, actual_index] += 1

print("rows are predicted classes, columns are actual classes")
for predicted_index, predicted_tag in enumerate(tags):
    print(predicted_tag[:7], end='', flush=True)
    for actual_index, actual_tag in enumerate(tags):
        print("\t%d" % confusion[predicted_index, actual_index], end='')
    print("", flush=True)

accuracy: 0.9907213167661771
rows are predicted classes, columns are actual classes
cat 12238 106
dog 124 12320

The result reaches 0.99 on the training set, which is pretty good, isn't it?
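The accuracy can be recovered from the confusion matrix alone: correct predictions sit on the diagonal, and the denominator is the sum of all cells. A minimal sketch using the numbers printed above (the function name `accuracy_from_confusion` is just an illustrative helper):

```python
def accuracy_from_confusion(confusion):
    """Correct predictions sit on the diagonal; divide by the total count."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total

# Rows are predicted classes, columns are actual classes (cat, dog).
confusion = [[12238, 106],
             [124, 12320]]
print(round(accuracy_from_confusion(confusion), 4))  # 0.9907
```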

You can download the model I trained at https://drive.google.com/open?id=1qQo8gj3KA6c1rPmJMVS_FZkVDcDmRgSf.

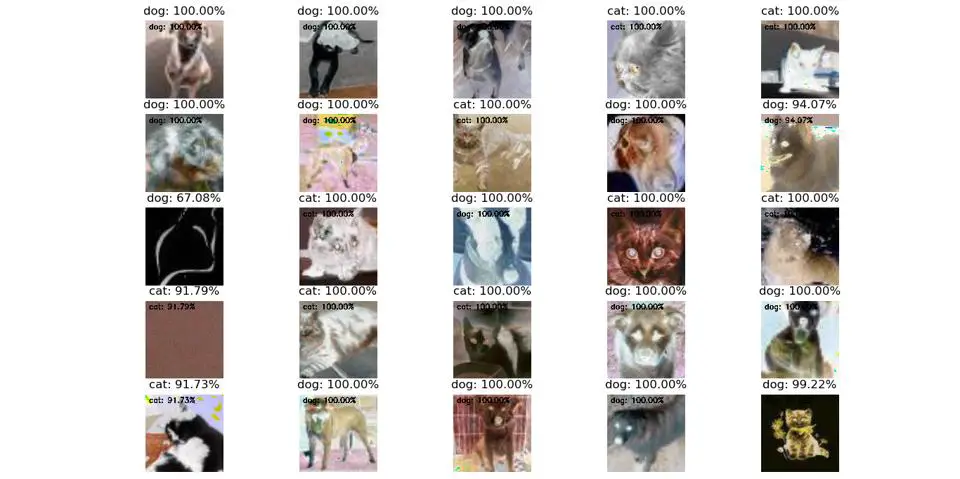

Let's display some predictions on the test set and see how they look.

Y_pred = model.predict(X_test, batch_size=batch_size)
y_pred = np.argmax(Y_pred, axis=1)

columns = 5
rows = 5
# Show only the first rows * columns images; the grid has no room for more.
for idx, val in enumerate(X_test[:rows * columns]):
    pred = y_pred[idx]
    label = "{}: {:.2f}%".format(tags[pred], Y_pred[idx][pred] * 100)
    # Undo the [-1, 1] scaling and convert to 8-bit pixels for OpenCV and matplotlib.
    image = dataset.reverse_preprocess_input(val).astype(np.uint8)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    cv2.putText(image, label, (10, 25), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 0, 0), 2)

    plt.subplot(rows, columns, idx + 1)
    plt.imshow(image)
    plt.title(label)
    plt.axis('off')

plt.show()
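The caption drawn on each image is the name of the most probable class followed by its probability. A small sketch of that formatting step on its own, with a made-up probability row (the helper name `format_prediction` is hypothetical):

```python
def format_prediction(probabilities, tags):
    """Pick the most probable class and format it like the plot captions."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return "{}: {:.2f}%".format(tags[best], probabilities[best] * 100)

# Made-up softmax output for one image.
print(format_prediction([0.03, 0.97], ["cat", "dog"]))  # dog: 97.00%
```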

Some cat images are misclassified as dogs, and a few images contain neither a cat nor a dog. Overall, the results are not too bad.

Tinkering with the model

The InceptionV3 model we are using has 311 layers in total. We will tinker with it in a few ways and see what results come out.

Experiment 1: Unfreeze some of the last layers and train them.

If you look closely, my source code contains this snippet:

# first: train only the top layers (which were randomly initialized)
# i.e. freeze all convolutional InceptionV3 layers
for layer in base_model.layers:
    layer.trainable = False

That is, I froze all 311 layers so they are not trained, and only used their output to train the newly added top layers that end in the softmax. Now I will try keeping the first 299 layers frozen and retraining all the remaining layers (don't ask why 299, I just like the number).

for layer in model.layers[:299]:
    layer.trainable = False
for layer in model.layers[299:]:
    layer.trainable = True
# Recompile the model afterwards so the new trainable flags take effect.
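The slicing pattern itself can be tried without Keras. The sketch below uses a stand-in `Layer` class and assumes the full model has 314 layers (311 base layers plus 3 new top layers); both the class and the count are assumptions for illustration only.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    """Stand-in for a Keras layer: only the fields this sketch needs."""
    name: str
    trainable: bool = True

def freeze_first(layers, n_frozen):
    """Freeze layers[:n_frozen] and make the remaining layers trainable."""
    for layer in layers[:n_frozen]:
        layer.trainable = False
    for layer in layers[n_frozen:]:
        layer.trainable = True

# Assumed size: 311 base layers plus 3 new top layers.
layers = [Layer("layer_%d" % i) for i in range(314)]
freeze_first(layers, 299)
print(sum(not layer.trainable for layer in layers))  # 299
print(sum(layer.trainable for layer in layers))      # 15
```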

The model graph is much the same as above, so I only post the parameter counts again.

==================================================================================================
Total params: 23,903,010
Trainable params: 2,493,954
Non-trainable params: 21,409,056
__________________________________________________________________________________________________

So about 2.5 million parameters are now retrained.
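Comparing the two summaries shows how many parameters the unfreezing moved from the frozen group to the trainable group. A quick check with the figures reported in this article:

```python
before = {"trainable": 2100226, "non_trainable": 21802784}
after = {"trainable": 2493954, "non_trainable": 21409056}

# The total never changes; parameters only move between the two groups.
assert sum(before.values()) == sum(after.values()) == 23903010

unfrozen = after["trainable"] - before["trainable"]
print(unfrozen)  # 393728
```

So the layers from index 299 onward that belong to the base model contribute 393,728 of the 2,493,954 trainable parameters; the rest come from the new top layers.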

You can download the model I trained at https://drive.google.com/open?id=1Ts18LICUAh6gcOnXcmuVr7PUG5IxpCdt.

Results:

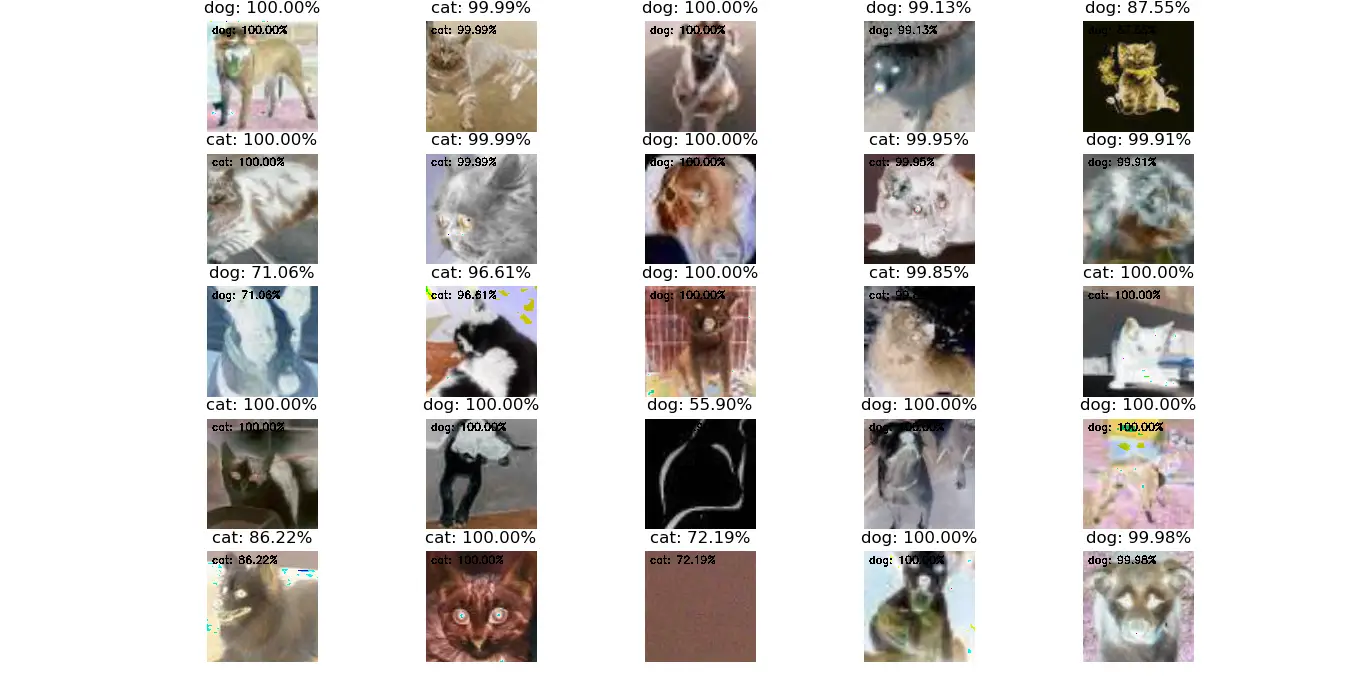

accuracy: 0.9834610730133119
rows are predicted classes, columns are actual classes
cat 2429 69
dog 13 2447

The 25 random images look much like the earlier result. A few images are still wrong, such as images with no animal in them and the cat in the top-right corner, which is labeled as a dog. Still, given the image quality, I think this result is quite good.

Experiment 2: Use only the first 72 layers of Inception.

In this experiment, I only use the first 72 layers of Inception for training. I change the build model function slightly as follows:

x = base_model.layers[72].output

One small caveat: Inception's layers are not sequential (you can see this in the graph above), so index 72 does not mean "the first 72 layers" the way it would in a purely sequential model.

Next, we retrain the model. The result is:

accuracy: 0.5494150867285196
rows are predicted classes, columns are actual classes
cat 339 131
dog 2103 2385

The result is quite poor: the model labels 4,488 of the 4,958 validation images as dog. The reason is that the layers do not form a sequence, so cutting at an arbitrary layer 72 loses much of the images' feature information. For example, if layer 80 combines the outputs of layers 79, 4 and 48 and I keep only the first 72 layers, then the important contribution that layers 4 and 48 make at the higher level is lost.
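To see why a layer index is not a reliable cut point in a branching network, consider a toy graph. Taking the output of one layer keeps only the layers that feed into it, so a layer with a smaller index on a parallel branch is dropped. The graph below is hypothetical and much smaller than InceptionV3; it is only meant to illustrate the idea.

```python
def ancestors(graph, node):
    """Return every layer that feeds into `node`, directly or indirectly."""
    seen = set()
    stack = list(graph[node])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph[current])
    return seen

# Hypothetical toy graph: layer -> list of layers it takes input from.
graph = {
    0: [],
    1: [0],      # branch A
    2: [0],      # branch B
    3: [1],      # branch A continues
    4: [2],      # branch B continues
    5: [3, 4],   # concatenation of both branches
}
kept = ancestors(graph, 3) | {3}
print(sorted(kept))                  # [0, 1, 3]
print(sorted(set(range(4)) - kept))  # [2]
```

Cutting at layer 3 keeps layers 0, 1 and 3 but silently drops layer 2, even though its index is smaller than 3.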

Thanks for following along. See you in the next posts.
